
Introduction: A New Chapter in AI-Generated Video

Until recently, video was the one domain AI hadn’t conquered — a complex format requiring not just image generation, but motion, depth, coherence, and cinematic logic.

But that chapter is over.

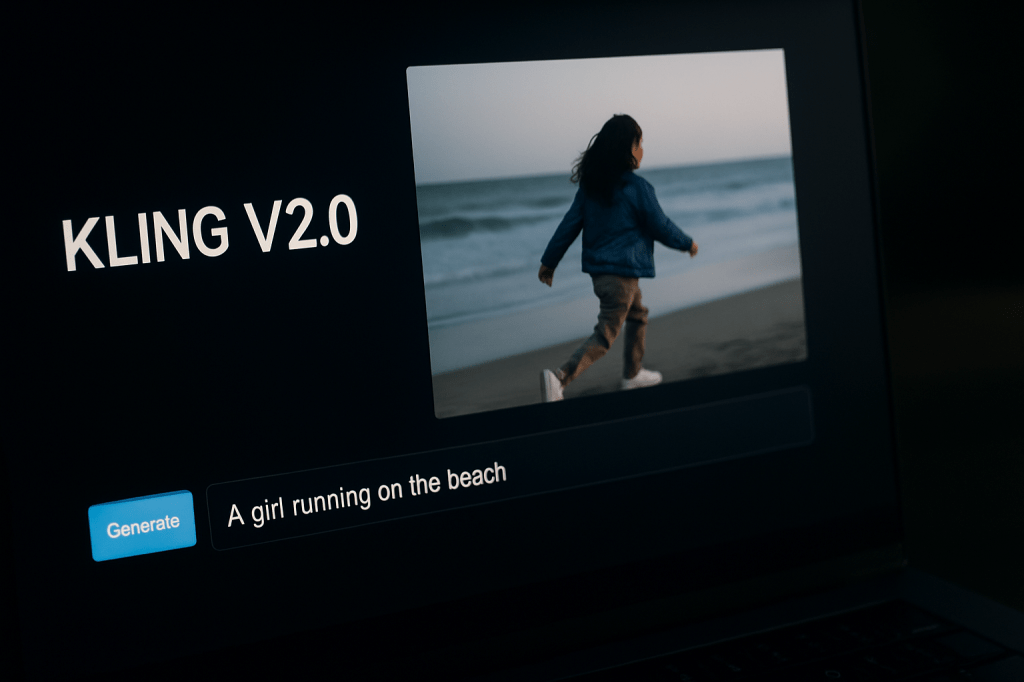

With the release of Kling V2.0, the AI video race just entered a new dimension.

Developed by Kuaishou, one of China’s largest video platforms, Kling isn’t just generating video — it’s directing it.

From fluid motion and complex camera angles to emotional storytelling, Kling V2.0 is pushing the boundaries of what AI can visualize — in full cinematic motion.

In this blog, we explore Kling’s capabilities, how it compares to its global rivals, and why this breakthrough could reshape everything from content creation to filmmaking, marketing, and beyond.

What Is Kling and Who Built It?

Kling is a state-of-the-art text-to-video AI model developed by Kuaishou Technology, the company behind one of China’s largest short-video platforms (also called Kuaishou, second only to Douyin, the Chinese counterpart of TikTok).

Initially introduced as an internal generative research project, Kling evolved rapidly — and V2.0 marks a major leap forward, challenging global models like OpenAI’s Sora and Runway’s Gen-3 in both quality and accessibility.

Kuaishou isn’t just building a tool — it’s embedding Kling into its massive video ecosystem, signaling an aggressive move toward fully AI-powered video production pipelines.

Kling V2.0: Core Features and Capabilities

Here’s what makes Kling V2.0 a standout — not just in China, but globally:

1. Ultra-Realistic Motion and Physical Behavior

One of the hardest problems in AI video is generating natural, glitch-free motion. Kling is built to tackle it, allowing characters to:

- Walk, run, or jump with believable biomechanics
- Interact naturally with props and environments
- Perform multi-step actions without jitter or warping

Whether it’s a woman running across a beach or a robot assembling parts in a lab, Kling mimics motion with cinematic smoothness.

2. Cinematic Camera Work

Kling supports advanced camera logic, such as:

- Dolly shots
- Pans and zooms
- Depth-of-field effects
- Slow motion

This isn’t just for show — it’s critical for emotional storytelling and creating videos that feel real, not robotic.

3. HD Output (1080p) with Scene Consistency

Unlike earlier AI models that topped out at 512px or 720px and produced blurry transitions, Kling V2.0:

- Outputs in 1080p resolution
- Maintains frame-to-frame consistency
- Handles complex lighting and reflections

Scenes feel grounded, spatially accurate, and visually coherent across multiple frames — which is critical for professional content use.

4. Prompt Sensitivity and Context Awareness

Kling’s natural language model has been upgraded for contextual understanding. For example:

Prompt: “A boy holding a red balloon walks past a bookstore in the rain”

→ Kling understands the sequence, the environment, and the emotional tone.
→ It generates reflections on wet pavement, a dark storefront, and accurate balloon movement.

It’s not just interpreting words — it’s translating intention.

5. Longer Video Durations

Kling now supports generating 5–8 second clips, which is a massive improvement from earlier models limited to 2–3 seconds.

This opens the door to:

- Short ads
- Music video loops
- Scene previsualization
- Social storytelling
- And more

6. Multi-Subject and Multi-Object Support

V2.0 handles:

- Two or more people interacting
- Animals and humans in motion together
- Crowd movement with individual detail

This is a major leap from models that only supported solo subjects or failed when more than one thing moved onscreen.

7. Physics Simulation and Environment Logic

Water splashes, gravity pulls objects down, reflections bend across glass. Kling models these behaviors closely enough that its scenes follow the way the real world works.

This makes generated video feel less like “AI imagination” and more like filmed footage, even when the subject is pure science fiction.

How Kling V2.0 Compares to OpenAI’s Sora

Let’s address the obvious question:

Is Kling better than Sora?

The answer depends on what you value.

| Feature | Kling V2.0 | OpenAI Sora |
| --- | --- | --- |
| Video quality | Very high (1080p, realistic) | Very high (up to 1080p) |
| Access | Public via the Kling AI platform, in China and internationally | Available to ChatGPT Plus and Pro subscribers since December 2024 |
| Prompt complexity | Strong, especially for short scenes | Extremely strong (multi-step logic) |
| Frame consistency | Impressive | Industry-leading |
| Motion simulation | Realistic | Cinematic and smooth |
| Public demo availability | Shown widely by Kuaishou | Widely shared since public launch |

Bottom line:

Kling is likely the most advanced public-facing AI video model available right now.

Sora might still lead technically — but Kling is already being applied at platform scale, which is arguably more disruptive.

Real-World Applications for Kling V2.0

1. Advertising and Commercials

Brands can generate:

- Product showcase shots
- Logo animations
- Story-based campaigns

Without needing film crews, actors, or studios.

2. Music Videos and Visualizers

Artists can:

- Translate lyrics into visual poetry
- Create animated loops or narrative clips
- Maintain a consistent style and vibe across visuals

With zero render farms or post-production teams.

3. Social Media Content

Creators can:

- Post surreal, cinematic shorts
- Blend AI with existing footage
- Rapidly generate daily content in any niche

The result? More output, less cost.

4. Education and Training

Visualize:

- Historical reenactments
- Scientific processes
- Technical explanations

In a motion-rich, emotionally engaging format.

5. Previsualization for Filmmaking

Directors and screenwriters can:

- Mock up entire scenes
- Try different camera setups
- Test mood and pacing

All before production starts.

This saves money, time, and creative energy.

6. Metaverse and Game Development

Teams can:

- Auto-generate lore scenes
- Build emotional cutscenes
- Visualize environments or backstories

Even with no 3D modeling skills.

How Kling Fits into the Future of Generative Media

Kling is part of a larger trend:

The collapse of traditional production pipelines.

What used to require:

- Teams of 20+ artists
- Weeks of editing
- Thousands of dollars in gear

…can now be initiated by one person with an idea and a well-crafted prompt.

Combined with AI voice (like ElevenLabs), music generation (like Soundraw), and AI video editing tools (like Runway), Kling is helping build an entire generative film stack.

We’re entering the era of solo studio creators — where video is no longer a hurdle, but a playground.

What’s Coming Next for Kling?

Based on the pace of development, here’s what we might expect from Kling V3.0 or future updates:

- Voice and sound integration
- Longer narrative sequences (15–30s)
- Live prompt editing during generation
- Style transfer or reference-based motion generation
- AI-assisted editing and scene stitching
- Automatic subtitles and translation overlays

And possibly, a full creator suite built into the Kuaishou platform — allowing influencers and brands to go from script to screen entirely inside the app.

Conclusion: Kling Is Directing the Future

Kling V2.0 isn’t just another generative model.

It’s a signal — that AI video is maturing fast, and it’s no longer experimental.

It’s becoming emotional. Market-ready. Intuitive.

And whether you’re a solo creator or a media giant, Kling brings one thing clearly into focus:

The future of video isn’t filmed.

It’s generated.

FAQs about Kling V2.0

1. Who developed Kling?

Kling is built by Kuaishou Technology, the company behind China’s second-largest short video platform.

2. Is Kling free to use?

Access is currently limited, but Kuaishou is gradually opening it to creators and partners.

3. What kind of videos can Kling generate?

Kling can generate realistic short clips based on prompts, including people, objects, environments, and action.

4. How long are the videos Kling can create?

Clips run 5–8 seconds in V2.0, with future versions expected to support longer sequences.

5. Does Kling require technical skills?

No. Anyone can use it by writing natural language prompts — no coding or editing required.

6. Can Kling videos be used commercially?

Usage rights depend on rollout region and platform terms — but quality-wise, Kling outputs are suitable for commercial applications.

7. Will Kling be integrated with Kuaishou’s apps?

Yes. Full integration into content creation pipelines for short-form video is already underway.
